This is an opinion editorial by Aleksandar Svetski, founder of The Bitcoin Times and The Amber App and author of “The UnCommunist Manifesto,” “Authentic Intelligence” and the upcoming “Bushido Of Bitcoin.”

The world is changing before our very eyes. Artificial intelligence (AI) is a paradigm-shifting technological breakthrough, but probably not for the reasons you might think or imagine.

You’ve probably heard something along the lines of, “Artificial general intelligence (AGI) is around the corner,” or, “Now that language is solved, the next step is conscious AI.”

Well… I’m here to tell you that these ideas are both red herrings. They are either the naive delusions of technologists who believe God is in the circuits, or the deliberate incitement of fear and hysteria by more malevolent people with ulterior motives.

I don’t think AGI is a threat, or that we have an “AI safety problem,” or that we’re around the corner from some singularity with machines.

However…

I do believe this technological paradigm shift poses a significant threat to humanity (which is, in fact, about the only thing on which I somewhat agree with the mainstream), but for completely different reasons.

To learn what those reasons are, let’s first try to understand what’s really happening here.

Introducing… The Stochastic Parrot!

Technology is an amplifier. It makes the good better, and the bad worse.

Just as a hammer is a technology that can be used to build a house or to beat someone over the head, computers can be used to document ideas that change the world, or they can be used to operate central bank digital currencies (CBDCs) that enslave you to crazy, communist cat ladies working at the European Central Bank.

The same goes for AI. It’s a tool. It’s a technology. It’s not a new lifeform, despite what the lonely nerds who are calling for progress to shut down so desperately want to believe.

What makes generative AI so interesting is not that it’s sentient, but that it’s the first time in our history that we are “speaking” or communicating with something other than a human being in a coherent fashion. The closest we’ve come to that before now has been with… parrots.

Yes: parrots!

You can train a parrot to kind of talk and talk back, and you can kind of understand it, but because we know it’s not really a human and doesn’t really understand anything, we’re not so impressed.

But generative AI… well, that’s a different story. We’ve been acquainted with it for six months now (in the mainstream) and we have no real idea how it works under the hood. We type some words, and it responds like that annoying, politically-correct, midwit nerd you know from class… or your average Netflix show.

In fact, you’ve probably even spoken with someone like this during support calls to Booking.com, or any other service where you’ve had to dial in or use web chat. So you’re immediately shocked by the responses.

“Holy shit,” you tell yourself. “This thing speaks like a real person!”

The English is immaculate. No spelling mistakes. Sentences make sense. It’s not only grammatically correct, but semantically so, too.

Holy shit! It must be alive!

Little do you realize that you’re talking to a highly sophisticated stochastic parrot. As it turns out, language is a little more rules-based than we all thought, and probability engines can actually do an excellent job of emulating intelligence through the frame, or conduit, of language.

The law of large numbers strikes again, and math achieves another victory!

But… what does this mean? What the hell is my point?

That this isn’t useful? That it’s proof it’s not a path to AGI?

Not necessarily, on both counts.

There is a lot of utility in such a tool. In fact, its greatest utility probably lies in its application as “MOT,” or “Midwit Obsolescence Technology.” Woke journalists and the countless “content creators” who have spent years talking a lot but saying nothing are now like dinosaurs watching the comet incinerate everything around them. It’s a beautiful thing. Life wins again.

Of course, these tools are also great for ideating, coding faster, doing some high-level learning, etc.

But from an AGI and consciousness standpoint, who knows? There mayyyyyyyyyyy be a pathway there, but my spidey sense tells me we’re way off, so I’m not holding my breath. I think consciousness is so much more complex, and to think we’ve conjured it up with probability machines is some strange blend of ignorant, arrogant, naive and… well… empty.

So, what the hell is my problem, and what’s the risk?

Enter The Age Of The LUI

Remember what I said about tools.

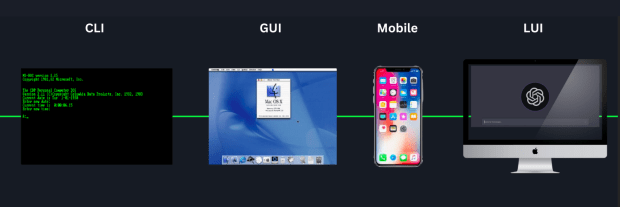

Computers are arguably the most powerful tool mankind has built. And computers have gone through the following evolution:

- Punch cards

- Command line

- Graphical user interface, i.e., point and click

- Mobile, i.e., thumbs and tapping

And now, we’re moving into the age of the LUI, or “Language User Interface.”

This is the big paradigm shift. It’s not AGI, but LUI. Going forward, every app we interact with will have a conversational interface, and we’ll no longer be limited by the bandwidth of how fast our fingers can tap on keys or screens.

Speaking “language” is several times faster than typing and tapping. Thinking is probably another level higher, but I’m not putting any electrodes into my head anytime soon. In fact, LUIs probably make Neuralink-type tech obsolete, because the risks of implanting chips in your brain will outweigh any marginal benefit over just speaking.

Either way, this decade we’ll go from tapping on graphical user interfaces to talking to our apps.

And therein lies the hazard.

In the same way that Google today determines what we see in searches, and Twitter, Facebook, TikTok and Instagram all “feed us” through their feeds, generative AI will tomorrow determine the answers to every question we have.

The screen doesn’t only become the lens through which you ingest everything about the world. The screen becomes your model of the world.

Mark Bisone wrote a fantastic article about this recently, which I urge you to read:

“The problem of ‘screens’ is actually a very old one. In many ways it goes back to Plato’s cave, and perhaps is so deeply embedded in the human condition that it precedes written languages. That’s because when we talk about a screen, we’re really talking about the transmission of an illusory model in an editorialized form.

“The trick works like this: You are presented with the image of a thing (and these days, with the sound of it), which its presenter either explicitly tells you or strongly implies is a window to the Real. The shadow and the form are the same, in other words, and the former is to be trusted as much as any fragment of reality that you can directly observe with your sensory organs.”

And for those thinking that “this won’t happen for a while,” well, here are the bumbling fools making a good attempt at it.

The ‘Great Homogenization’

Imagine every question you ask, every image you request, every video you conjure up, every piece of data you seek, being returned in a way that is deemed “safe,” “responsible” or “acceptable” by some faceless “safety police.”

Imagine that every bit of information you consume has been transformed into some lukewarm, middle version of the truth; that every opinion you ask for isn’t really an opinion or a viewpoint, but some inoffensive, apologetic response that doesn’t actually tell you anything (this is the benign, annoying version) or, worse, some ideology wrapped in a response, so that everything you know becomes some variation of what the producers of said “safe AI” want you to think and know.

Imagine you had modern Disney characters, like those clowns from “The Eternals” movie, as your ever-present intellectual assistants. It would make you “dumb squared.”

“The UnCommunist Manifesto” outlined the utopian communist dream as the grand homogenization of man:

If only everyone were a series of numbers on a spreadsheet, or automatons with the same opinion, it would be so much easier to have paradise on earth. You could ration out just enough for everyone, and then we’d all be equally miserable proletarians.

This is like George Orwell’s thought police crossed with “Inception,” because every question you had would be perfectly captured and monitored, and every response from the AI could incept an ideology in your mind. In fact, when you think about it, that’s what information does. It plants seeds in your mind.

This is why you need a diverse set of ideas in the minds of men! You want a flourishing rainforest in your mind, not some monocrop field of wheat with depleted soil, susceptible to weather and pests, and completely dependent on Monsanto (or OpenAI or Pfizer) for its survival. You want your mind to flourish, and for that you need idea-versity.

This was the promise of the internet: a place where anyone can say anything. The internet has been a force for good, but it is under attack. The attack takes many forms, from the de-anonymization of social profiles like those on Twitter and Facebook and the creeping KYC across all sorts of online platforms, through to the algorithmic vomit spewed forth by the platforms themselves. We got a taste of all of it, in all its glory, from 2020 onward. And it only seems to be getting worse.

The push by WEF-like organizations to institute KYC for online identities, and to tie it to a CBDC and your iris, is one option, but it’s a bit overt and explicit. After the recent pushback against medical experimentation, such a move may be harder to pull off. An easier move could be to let LUIs take over (as they will, because they are a superior user experience) and, in the meantime, create an “AI safety council” that will institute “safety” filters on all major large language models (LLMs).

Don’t believe me? Our G7 overlords are already discussing it.

Today, the web is still made up of webpages, and if you’re curious enough, you can find the deep, dark corners and crevices of dissidence. You can still surf the web. Mostly. But when everything becomes accessible only through these models, you’re not surfing anything anymore. You’re simply being handed a synthesized response that has been run through all the necessary filters and censors.

There will probably be a sprinkle of truth somewhere in there, but it will be wrapped up in so much “safety” that 99.9% of people won’t hear or know of it. The truth will become whatever the model says it is.

I’m not sure what happens to much of the internet when the discoverability of information fundamentally changes. I can imagine that, as most applications transition to some form of language interface, it’s going to be very hard to find things that the “portal” you’re using doesn’t deem safe or approved.

One could, of course, argue that in the same way you need tenacity and curiosity to find the dissident crevices of the web, you’ll need to learn to prompt and hack your way into better answers on these platforms.

And that may be true, but it seems to me that each time you find something “unsafe,” the route will be patched or blocked.

You could then argue that “this could backfire on them, by diminishing the utility of the tool.”

And once again, I would probably agree. In a free market, such stupidity would make way for better tools.

But of course, the free market is becoming a thing of the past. What we’re seeing with these hysterical pushes for “safety” is that, knowingly or unknowingly, they are paving the way for squashing possible alternatives.

Once “safety” committees exist to “regulate” these platforms (read: regulate speech), new models that haven’t been run through such “safety” or “toxicity” filters may not be available for consumer use, or they may be made illegal or hard to discover. How many people still use Tor? Or DuckDuckGo?

And if you think this isn’t happening, here’s some information on the current toxicity filters that most LLMs already plug into. It’s only a matter of time before such filters become like KYC mandates on financial applications: a new compliance appendage, strapped onto language models like tits on a bull.

Whichever counter-argument you make against this homogenization attempt, both actually support my point: we need to build alternatives, and we need to begin that process now.

For those who still believe that AGI is around the corner and that LLMs are a significant step in that direction: by all means, you’re free to believe what you want, but that doesn’t negate the point of this essay.

If language is the new “screen” and all the language we see or hear must be run through approved filters, then the information we consume, the way we learn and the very thoughts we have will all be narrowed into a very small Overton window.

I think that’s a massive risk for humanity.

We’ve become dumb enough with social media algorithms serving us what the platforms think we should know. And when they wanted to turn on the hysteria, it was easy. Language user interfaces are social media times 100.

Imagine what they could do with that the next time a so-called “crisis” hits.

It won’t be pretty.

The marketplace of ideas is necessary for a healthy and functional society. That’s what I want.

Their narrowing of thought won’t work in the long term, because it’s anti-life. In the end, it will fail, just like every other attempt to bottle up truth and ignore it. But each attempt comes with unnecessary damage, pain, loss and catastrophe. That’s what I’m trying to avoid, and why I’m trying to help ring the alarm bell.

What To Do About All This?

If we aren’t proactive here, this whole AI revolution could become the “great homogenization.” To avoid that, we have to do two main things:

- Push back against the “AI safety” narratives: These might look like safety committees on the surface, but when you dig a little deeper, you realize they are speech and thought regulators.

- Build alternatives, now: Build many and open source them. The sooner we do this, and the sooner they can run locally, the better our chance of avoiding a world in which everything trends toward homogenization.

If we do this, we can have a world with real diversity, and not the woke kind of bullshit. I mean diversity of thought, diversity of ideas, diversity of viewpoints and a true marketplace of ideas.

An idea-versity. The original promise of the internet, and no longer bound by the low bandwidth of typing and tapping. Couple that with Bitcoin, the internet of money, and you have the ingredients for a bright new future.

This is what the team and I are doing at Laier Two Labs. We’re building smaller, narrow models that people can use as substitutes for these large language models.

We’re going to open source all our models and, in time, aim to make them compact enough to run locally on your own machines, while retaining a degree of depth, character and unique bias for use when and where you need it most.

We will announce our first model in the coming weeks. The goal is to make it the go-to model for a topic and industry I hold very dear to my heart: Bitcoin. I also believe it is here that we must start building a suite of alternative AI models and tools.

I’ll unveil it in my next post. Until then.

This is a guest post by Aleksandar Svetski, founder of The Bitcoin Times and The Amber App, author of “The UnCommunist Manifesto,” “Authentic Intelligence” and the upcoming “Bushido Of Bitcoin.” Opinions expressed are entirely their own and do not necessarily reflect those of BTC Inc or Bitcoin Magazine.
